
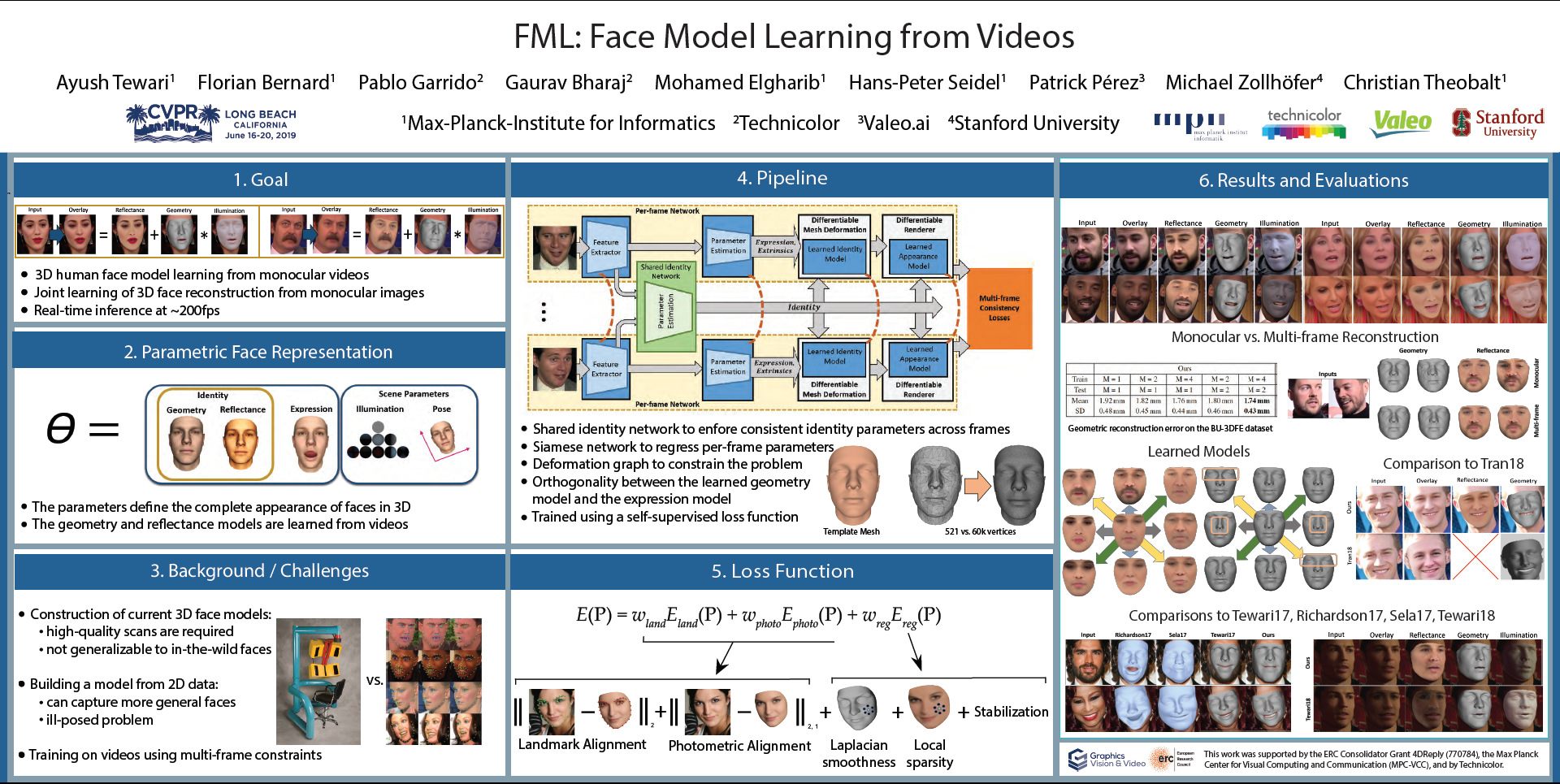

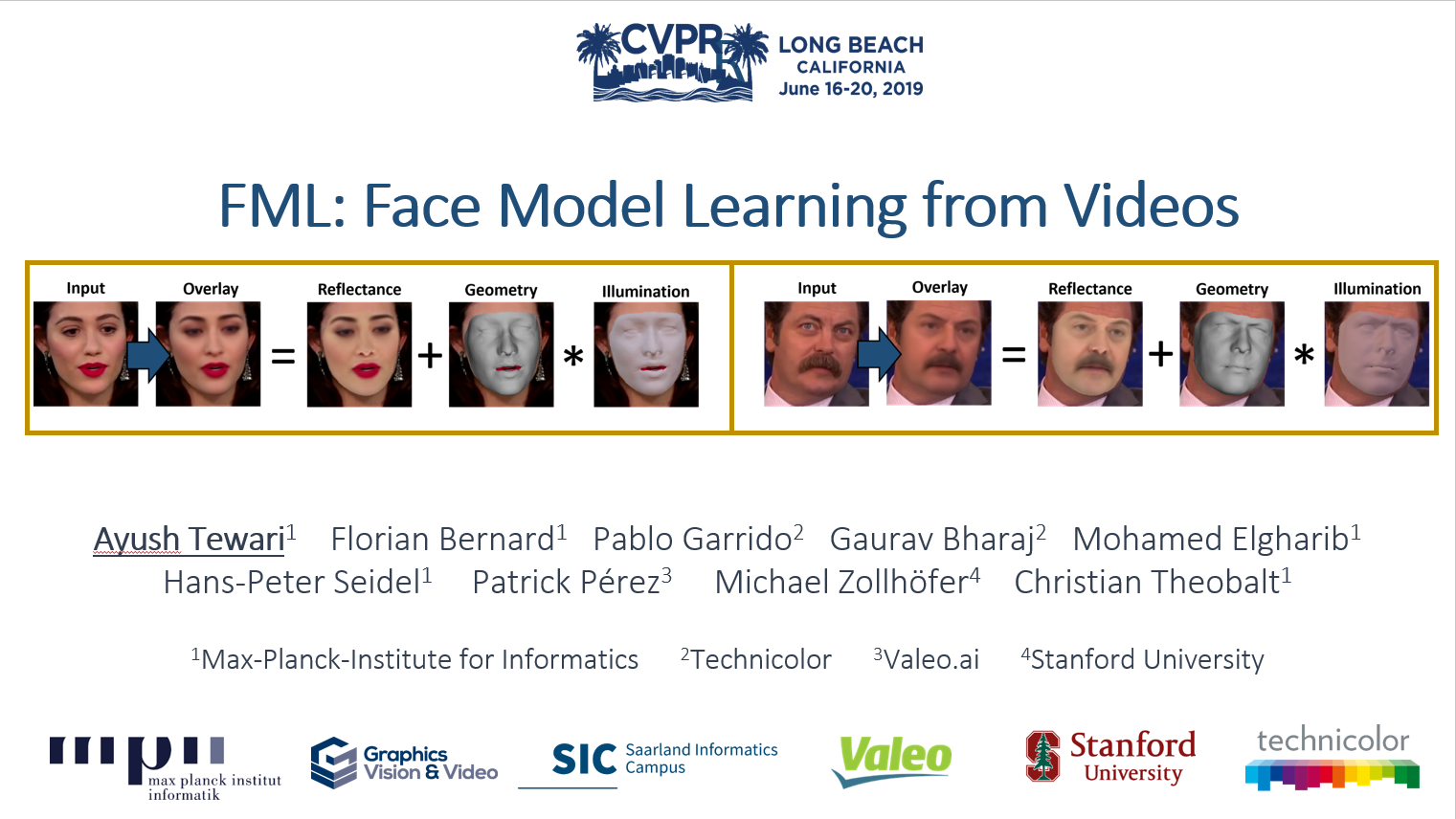

FML: Face Model Learning from Videos

| A. Tewari 1 | F. Bernard 1 | P. Garrido 2 | G. Bharaj 2 | M. Elgharib 1 | H-P. Seidel 1 | P. Perez 3 | M. Zollhöfer 4 | C. Theobalt 1 |

| 1 MPI Informatics, Saarland Informatics Campus | 2 Technicolor | 3 Valeo.ai | 4 Stanford University |

| Full Video | More Qualitative Results | Poster | Talk |


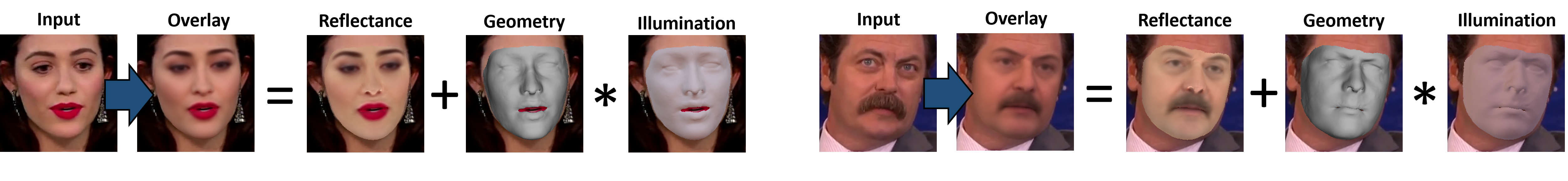

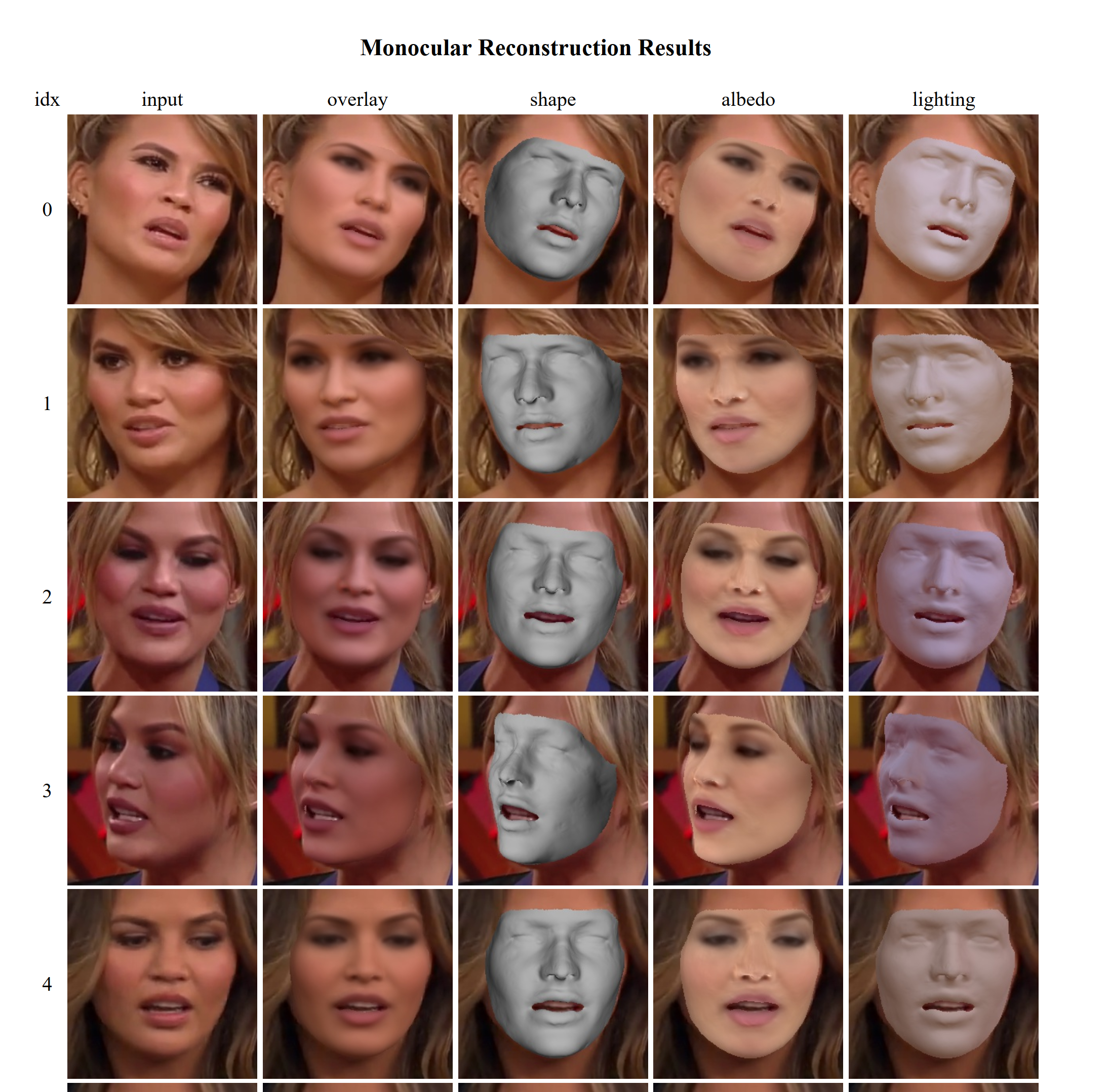

Monocular image-based 3D reconstruction of faces is a long-standing problem in computer vision. Since image data is a 2D projection of a 3D face, the resulting depth ambiguity makes the problem ill-posed. Most existing methods rely on data-driven priors that are built from limited 3D face scans. In contrast, we propose multi-frame video-based self-supervised training of a deep network that (i) learns a face identity model, both in shape and appearance, while (ii) jointly learning to reconstruct 3D faces. Our face model is learned using only corpora of in-the-wild video clips collected from the Internet. This virtually endless source of training data enables learning of a highly general 3D face model. To achieve this, we propose a novel multi-frame consistency loss that ensures consistent shape and appearance across multiple frames of a subject's face, thus minimizing depth ambiguity. At test time, we can use an arbitrary number of frames, so we can perform both monocular and multi-frame reconstruction.
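The abstract does not spell out the exact form of the multi-frame consistency loss. As an illustration of the general idea only, the following is a minimal sketch assuming that each frame of a clip yields a vector of identity coefficients (shape and appearance) for the same subject. The hypothetical helpers `mean_code` and `multi_frame_consistency_loss` pool these into one shared identity and penalize each frame's squared deviation from it. The names and the squared-distance penalty are assumptions for illustration, not the paper's formulation.

```python
from typing import List, Sequence

# Hypothetical representation: one identity coefficient vector per frame.
Vector = List[float]


def mean_code(codes: Sequence[Vector]) -> Vector:
    """Average per-frame identity codes into one shared code (any N >= 1)."""
    if not codes:
        raise ValueError("need at least one frame")
    dim = len(codes[0])
    n = len(codes)
    return [sum(c[i] for c in codes) / n for i in range(dim)]


def multi_frame_consistency_loss(codes: Sequence[Vector]) -> float:
    """Mean squared distance of each frame's identity code to the shared mean.

    Assumed penalty for illustration: frames of the same subject should agree
    on identity, so any per-frame deviation from the pooled code is penalized.
    """
    shared = mean_code(codes)
    total = 0.0
    for c in codes:
        total += sum((a - b) ** 2 for a, b in zip(c, shared))
    return total / len(codes)


if __name__ == "__main__":
    # Two frames that disagree on identity incur a positive penalty.
    print(multi_frame_consistency_loss([[0.0, 0.0], [2.0, 2.0]]))
    # A single frame (the monocular case) is trivially consistent.
    print(multi_frame_consistency_loss([[3.0, -1.0]]))
```

Because the pooled code is a mean over however many frames are supplied, the same sketch applies unchanged to one frame or to many, loosely mirroring the test-time flexibility described above.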


| Paper | Supplemental |

@InProceedings{tewari2019fml,
