LIME: Live Intrinsic Material Estimation

CVPR 2018 Spotlight Oral

Abstract

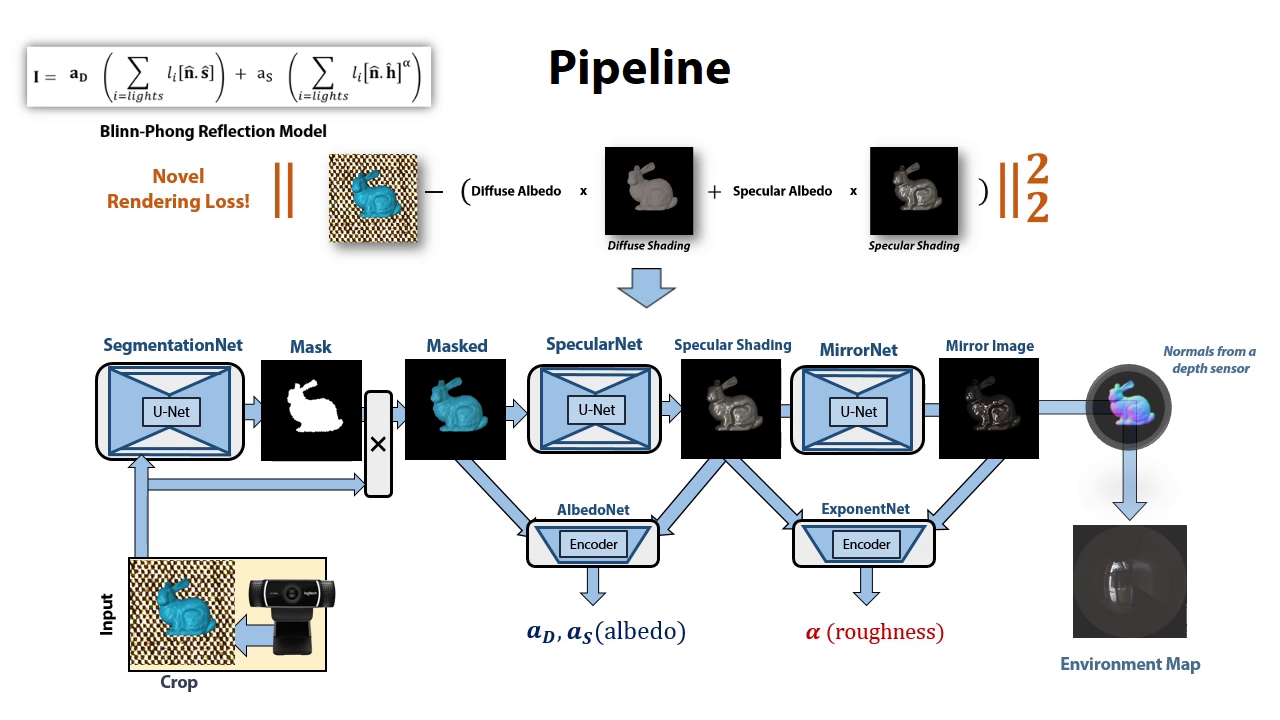

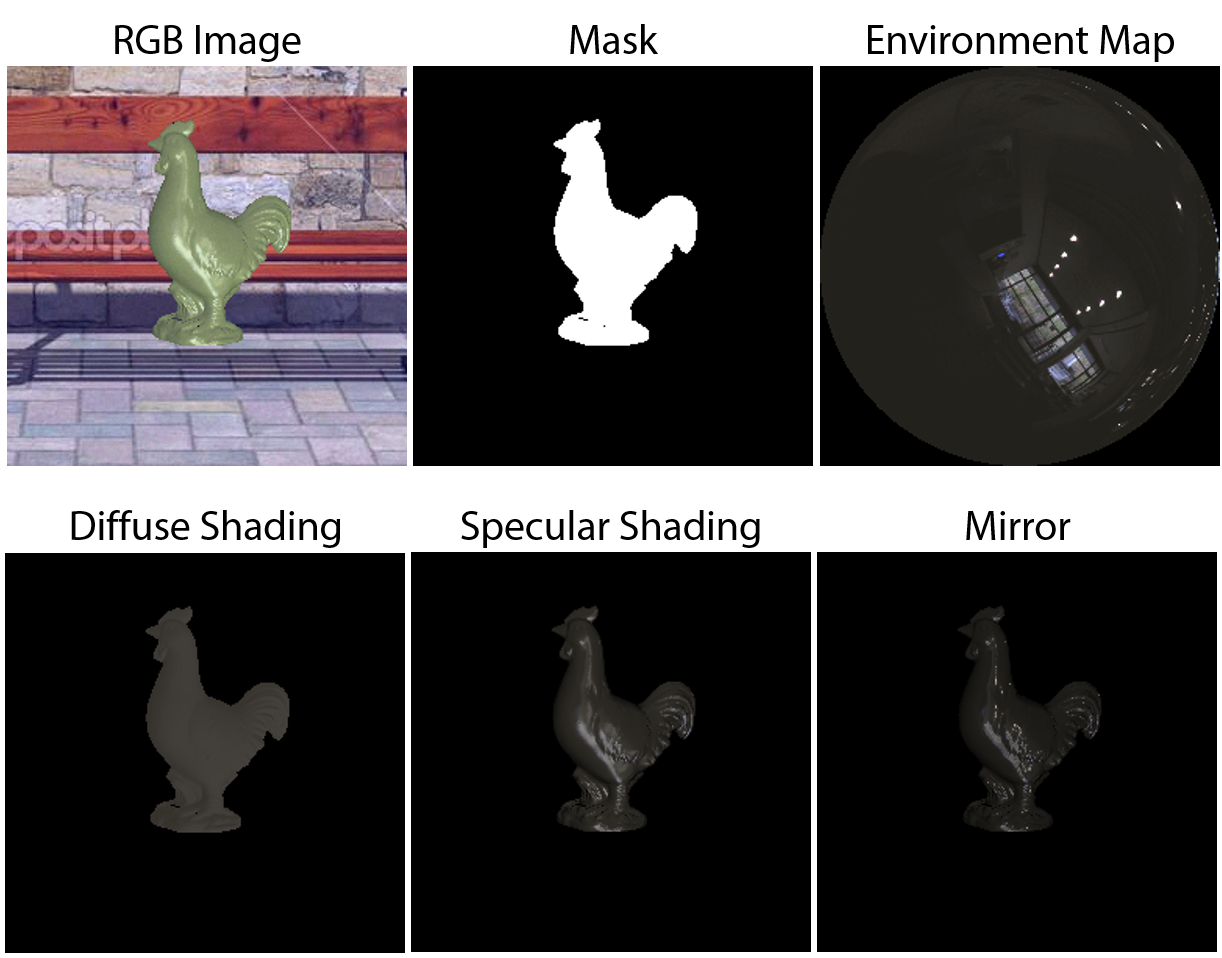

We present the first end-to-end approach for real-time material estimation for general object shapes that requires only a single color image as input. In addition to Lambertian surface properties, our approach fully automatically computes the specular albedo, material shininess, and a foreground segmentation. We tackle this challenging and ill-posed inverse rendering problem using recent advances in image-to-image translation techniques based on deep convolutional encoder-decoder architectures. The underlying core representations of our approach are specular shading, diffuse shading and mirror images, which allow the network to learn an effective and accurate separation of diffuse and specular albedo. In addition, we propose a novel, highly efficient perceptual rendering loss that mimics real-world image formation and obtains intermediate results even at run time. The estimation of material parameters at real-time frame rates enables exciting mixed-reality applications, such as seamless, illumination-consistent integration of virtual objects into real-world scenes, and virtual material cloning. We demonstrate our approach in a live setup, compare it to the state of the art, and demonstrate its effectiveness through quantitative and qualitative evaluation.
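The abstract describes separating an image into diffuse and specular layers and using a rendering loss that re-creates the input image. The following is a minimal sketch, not the authors' implementation, assuming a Blinn-Phong-style model with a single directional light: a pixel is re-rendered as diffuse albedo times diffuse shading plus specular albedo times specular shading, where the shininess exponent controls how tightly the highlight falls off. The function names (`shade`, `render`, `rendering_loss`) are hypothetical, mirror images are not modeled, and the loss is a plain masked mean squared error standing in for the paper's perceptual rendering loss.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        return (0.0, 0.0, 0.0)  # degenerate vector; avoid division by zero
    return (v[0] / n, v[1] / n, v[2] / n)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def shade(normal: Vec3, light_dir: Vec3, view_dir: Vec3,
          light_rgb: Vec3, shininess: float) -> Tuple[Vec3, Vec3]:
    """Assumed Blinn-Phong diffuse and specular shading for one directional light."""
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    h = normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))  # half vector
    diffuse = max(0.0, dot(n, l))
    # No highlight when the light is behind the surface.
    specular = max(0.0, dot(n, h)) ** shininess if diffuse > 0.0 else 0.0
    shading_d = tuple(c * diffuse for c in light_rgb)
    shading_s = tuple(c * specular for c in light_rgb)
    return shading_d, shading_s


def render(albedo_d: Vec3, albedo_s: Vec3, shading_d: Vec3, shading_s: Vec3) -> Vec3:
    """Per-channel image formation: diffuse albedo * diffuse shading + specular albedo * specular shading."""
    return tuple(ad * sd + as_ * ss
                 for ad, as_, sd, ss in zip(albedo_d, albedo_s, shading_d, shading_s))


def rendering_loss(rendered: Sequence[Vec3], observed: Sequence[Vec3],
                   mask: Sequence[bool]) -> float:
    """Mean squared error over foreground pixels only (mask entry True); 0.0 if none."""
    errs = [sum((r - o) ** 2 for r, o in zip(rp, op))
            for rp, op, m in zip(rendered, observed, mask) if m]
    return sum(errs) / (3 * len(errs)) if errs else 0.0
```

For example, with the normal, light and view directions all equal to `(0, 0, 1)` and a white light, both shading terms are 1, so a pixel with diffuse albedo `(0.5, 0.2, 0.1)` and specular albedo `(0.3, 0.3, 0.3)` re-renders to roughly `(0.8, 0.5, 0.4)`. Restricting the loss to the foreground mask reflects the abstract's mention of a foreground segmentation; whether the actual method weights its loss this way is an assumption here.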

Downloads

© Copyright by the Authors, 2018. This is the author's version of the work. It is posted here for your personal use, not for redistribution. The definitive version will be published in IEEE Xplore.

Citation

@inproceedings{Meka:2018,
  author = {Meka, Abhimitra and Maximov, Maxim and Zollhoefer, Michael and Chatterjee, Avishek and Seidel, Hans-Peter and Richardt, Christian and Theobalt, Christian},
  title = {LIME: Live Intrinsic Material Estimation},
  booktitle = {Proceedings of Computer Vision and Pattern Recognition ({CVPR})},
  url = {http://gvv.mpi-inf.mpg.de/projects/LIME/},
  numpages = {11},
  month = {June},
  year = {2018}
}

Acknowledgments

This work was supported by EPSRC grant CAMERA (EP/M023281/1), ERC Starting Grant CapReal (335545), and the Max Planck Center for Visual Computing and Communications (MPC-VCC).