Real-time Global Illumination Decomposition of Videos

*Authors contributed equally to this work

ACM Transactions on Graphics 2021

(To be presented at SIGGRAPH '21)

Abstract

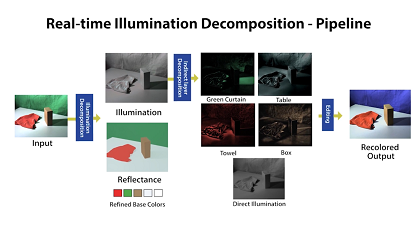

We propose the first approach for the decomposition of a monocular color video into direct and indirect illumination components in real time. We retrieve, in separate layers, the contribution made to the scene appearance by the scene reflectance, the light sources and the reflections from various coherent scene regions to one another. Existing techniques that invert global light transport require image capture under multiplexed controlled lighting, or only enable the decomposition of a single image at slow off-line frame rates. In contrast, our approach works for regular videos and produces

temporally coherent decomposition layers at real-time frame rates. At the core of our approach are several sparsity priors that enable the estimation of the per-pixel direct and indirect illumination layers based on a small set of jointly estimated base reflectance colors. The resulting variational decomposition problem uses a new formulation based on sparse and dense sets of non-linear equations that we solve efficiently using a novel alternating data-parallel optimization strategy. We evaluate our approach qualitatively and quantitatively, and show improvements over the state of the art in this

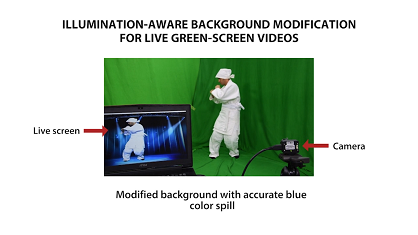

field, in both quality and runtime. In addition, we demonstrate various realtime appearance editing applications for videos with consistent illumination.

Downloads

[© Copyrights by the Authors, 2021. This is the author's version of the work. It is posted here for your personal use. Not for redistribution.]

Citation

@inproceedings{Meka:2021,

author = {Meka, Abhimitra and Shafiei, Mohammad and Zollhoefer, Michael and Richardt, Christian and Theobalt, Christian},

title = {Real-time Global Illumination Decomposition of Videos},

journal = {ACM Transactions on Graphics},

url = {http://gvv.mpi-inf.mpg.de/projects/LiveIlluminationDecomposition/},

volume = {1},

number = {1},

month = {January},

year = {2021},

doi = {10.1145/3374753},

}}

Acknowledgments

We thank the participants of the video sequences used in this work, particularly Jiayi Wang, Lingjie Liu and Edgar Tretschk. We also thank Carroll et al. [2012] and Bonneel et al. [2014] for providing their results in their supplementary material for comparisons. We also thank the authors of 'Intrinsic Images in the Wild' [Bell et al. 2014] for making their dataset available to the public. Abhimitra Meka and Christian Theobalt were supported by the ERC Consolidator Grant 4DRepLy (770784), Michael Zollhoefer was supported by Max Planck Center for Visual Computing and Communications (MPC-VCC) and Christian Richardt was supported by RCUK grant CAMERA (EP/M023281/1) and EPSRC-UKRI Innovation Fellowship (EP/S001050/1).