Graphics, Vision & Video

Capturing Relightable Human Performances under General Uncontrolled Illumination

| Guannan Li 1,2 | Chenglei Wu 3,4 | Carsten Stoll 3 | Yebin Liu 1 | Kiran Varanasi 3 | Qionghai Dai 1 | Christian Theobalt 3 |

| 1 Department of Automation, Tsinghua University | 2 Graduate School at Shenzhen, Tsinghua University | 3 MPI for Informatics | 4 Intel Visual Computing Institute |


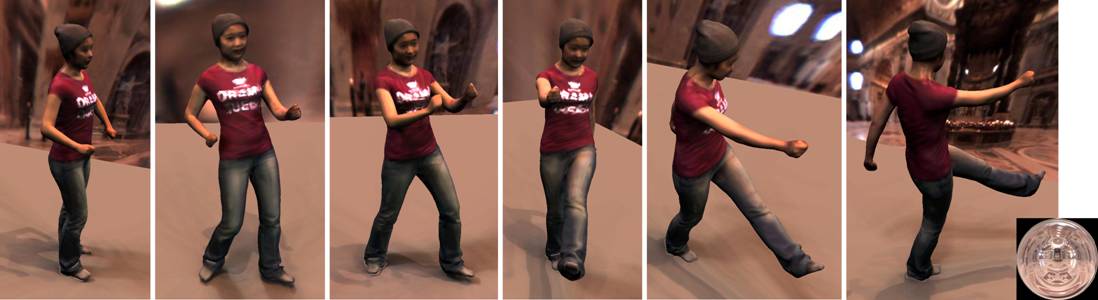

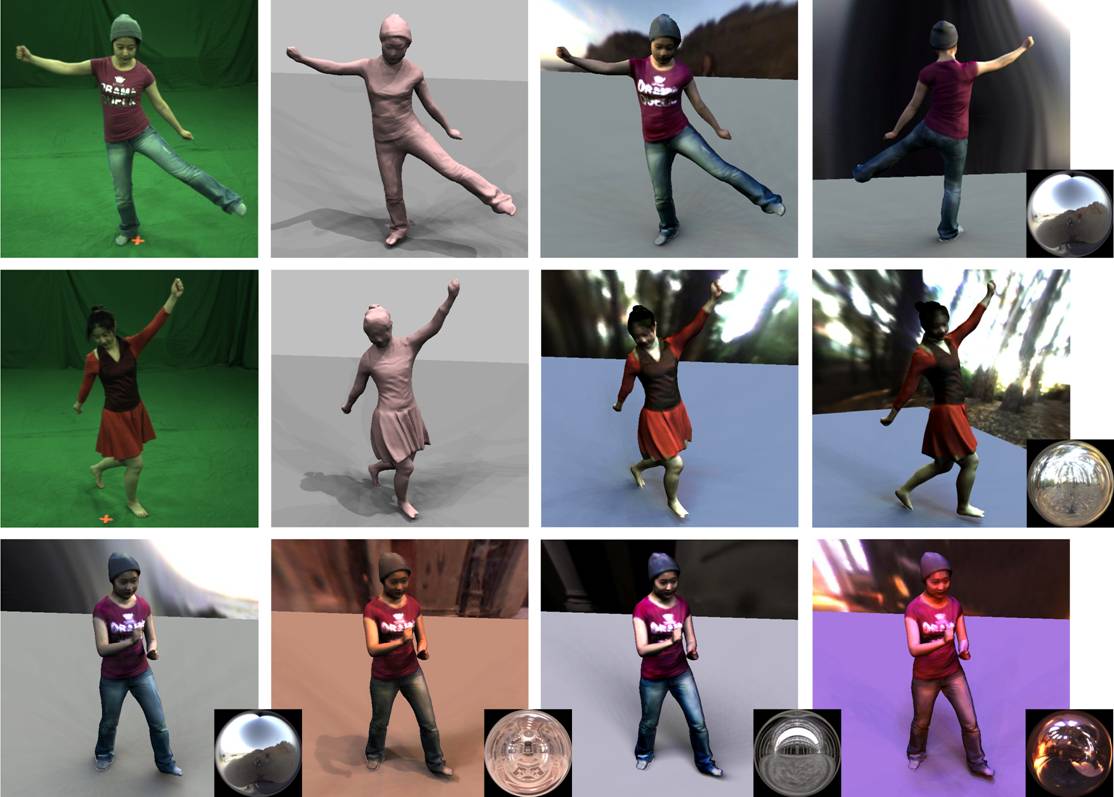

We present a novel approach for creating relightable free-viewpoint human performances from multi-view video recorded under general, uncontrolled, and uncalibrated illumination. We first capture a multi-view sequence of an actor wearing arbitrary apparel and reconstruct a spatio-temporally coherent coarse 3D model of the performance using a marker-less tracking approach. Using these coarse reconstructions, we estimate the low-frequency component of the illumination in a spherical harmonics (SH) basis together with the diffuse reflectance, and then use both to estimate the dynamic geometry detail of the actor from shading cues. Given the high-quality time-varying geometry, the estimated illumination is extended to the all-frequency domain by re-estimating it in a wavelet basis. Finally, the all-frequency illumination is used to reconstruct the spatially-varying BRDF of the surface. The recovered time-varying surface geometry and spatially-varying non-Lambertian reflectance allow us to generate high-quality model-based free-viewpoint videos of the actor under novel illumination conditions. Our method enables plausible reconstruction of relightable dynamic scene models without a complex controlled lighting apparatus, and opens up a path towards relightable performance capture in less constrained environments and with less complex acquisition setups.
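To make the low-frequency illumination step more concrete, the following is a minimal sketch of fitting nine second-order SH lighting coefficients to observed shading by linear least squares. It is not the authors' implementation. It assumes a single color channel, a Lambertian surface with a known uniform albedo, no shadowing or visibility term, and that the Lambertian convolution factors are absorbed into the coefficients. All function names (`sh_basis`, `solve_linear`, `estimate_sh_lighting`, `random_unit_vector`) and the synthetic data are hypothetical and chosen only for illustration.

```python
import math
import random


def sh_basis(n):
    """Real spherical harmonics up to order 2, evaluated at unit normal n."""
    x, y, z = n
    return [
        0.282095,
        0.488603 * y,
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ]


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def estimate_sh_lighting(normals, intensities, albedo):
    """Least-squares fit of 9 SH coefficients via the normal equations.

    Assumed model (illustrative only): I = albedo * sum_k l_k * Y_k(normal).
    """
    rows = [[albedo * v for v in sh_basis(n)] for n in normals]
    k = 9
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * v for r, v in zip(rows, intensities)) for i in range(k)]
    return solve_linear(ata, atb)


def random_unit_vector(rng):
    """Draw a direction uniformly on the sphere (normalized Gaussian sample)."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-9:
            return [c / norm for c in v]


# Synthetic check: render shading from known coefficients, then recover them.
rng = random.Random(0)
albedo = 0.8  # assumed known and uniform for this sketch
true_light = [1.0, 0.3, 0.5, -0.2, 0.1, 0.0, 0.05, -0.1, 0.2]
normals = [random_unit_vector(rng) for _ in range(500)]
intensities = [
    albedo * sum(l * b for l, b in zip(true_light, sh_basis(n)))
    for n in normals
]
estimated = estimate_sh_lighting(normals, intensities, albedo)
max_error = max(abs(e - t) for e, t in zip(estimated, true_light))
print("max coefficient error:", max_error)
```

The sketch fits lighting alone with the albedo held fixed, whereas the approach described above estimates illumination together with the diffuse reflectance. In practice a library solver such as `numpy.linalg.lstsq` would likely replace the hand-written elimination.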

| Paper pdf (14.6M) | Video avi (206M) | Presentation not yet available |

@inproceedings{LWSLVDT13,
