Andreas Baak, Meinard Müller, Gaurav Bharaj, Hans-Peter Seidel, Christian Theobalt

A Data-Driven Approach for Real-Time Full Body Pose Reconstruction from a Depth Camera

ICCV 2011 [pdf], [bib], [video]

In recent years, depth cameras have become a widely available sensor type that captures depth images at real-time frame rates. Even though recent approaches have shown that 3D pose estimation from monocular 2.5D depth images has become feasible, there are still challenging problems due to strong noise in the depth data and self-occlusions in the motions being captured. In this paper, we present an efficient and robust pose estimation framework for tracking full-body motions from a single depth image stream. Following a data-driven hybrid strategy that combines local optimization with global retrieval techniques, we contribute several technical improvements that lead to speed-ups of an order of magnitude compared to previous approaches. In particular, we introduce a variant of Dijkstra's algorithm to efficiently extract pose features from the depth data and describe a novel late-fusion scheme based on an efficiently computable sparse Hausdorff distance to combine local and global pose estimates. Our experiments show that the combination of these techniques facilitates real-time tracking with stable results even for fast and complex motions, making it applicable to a wide range of interactive scenarios.
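To illustrate the late-fusion idea, the following minimal sketch scores each pose hypothesis by a directed Hausdorff distance from a small set of feature points to the depth point cloud and keeps the hypothesis with the smaller score. It is not the implementation from the paper: the function names, the choice of feature points, and the brute-force nearest-neighbour search are assumptions made purely for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Sequence[float]


def directed_hausdorff(features: Sequence[Point], cloud: Sequence[Point]) -> float:
    """Largest distance from any feature point to its nearest cloud point."""
    return max(min(math.dist(f, p) for p in cloud) for f in features)


def select_hypothesis(
    hypotheses: Sequence[Sequence[Point]], cloud: Sequence[Point]
) -> Tuple[int, List[float]]:
    """Return the index of the best-matching hypothesis and all distances.

    Each hypothesis is a (hypothetical) sparse set of 3D points sampled
    from a pose candidate, e.g. a few points on the body model.
    """
    distances = [directed_hausdorff(h, cloud) for h in hypotheses]
    best = min(range(len(hypotheses)), key=distances.__getitem__)
    return best, distances


# Toy data: three observed depth points and two pose candidates.
cloud = [(0.0, 0.0, 2.0), (0.5, 0.0, 2.0), (0.0, 1.0, 2.0)]
local_pose = [(0.0, 0.0, 2.0), (0.5, 0.1, 2.0)]   # stays close to the cloud
global_pose = [(0.0, 0.0, 2.0), (1.5, 0.0, 2.0)]  # one point far from the cloud

best, distances = select_hypothesis([local_pose, global_pose], cloud)
print(best, distances)
```

Because only a handful of feature points are compared against the cloud, the cost per hypothesis stays small, which is the general motivation for a sparse distance in a real-time setting.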

In our experiments, we compare with previous work using the

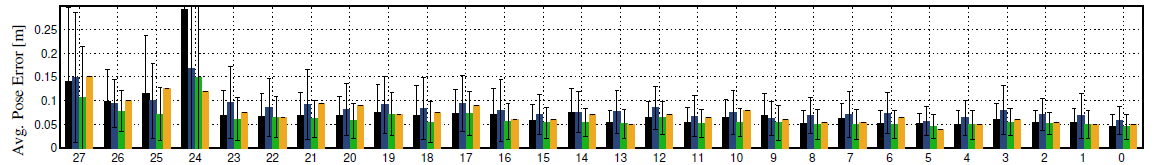

publicly available benchmark data set of the paper [1]. We gain significant improvements in accuracy and robustness while achieving frame rates of up to 100 fps (opposed to 4 fps reported in [1]). The data set contains 28 sequences of ToF data obtained from a Mesa Imaging SwissRanger SR 4000 ToF camera. Additionally, ground truth marker positions obtained from a marker-based motion capture system are provided. By means of an average pose error as defined in the paper, we compare the the results of our algorithm to [1], see the figure below. Here, the green bars depict our results, and the yellow bars the results as reported in [1] (standard deviation bars where not available).
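The exact definition of the average pose error is given in the paper and is not reproduced here. As a rough sketch only, the code below assumes that the error of a frame is the mean Euclidean distance between estimated and ground-truth marker positions, and that a sequence is summarized by the mean and standard deviation of the per-frame errors. The function names and the toy data are hypothetical.

```python
import math
from statistics import mean, pstdev
from typing import Sequence, Tuple

Point = Sequence[float]
Frame = Sequence[Point]


def frame_error(estimated: Frame, ground_truth: Frame) -> float:
    """Mean Euclidean distance between corresponding markers in one frame."""
    if len(estimated) != len(ground_truth):
        raise ValueError("marker counts differ")
    return mean(math.dist(e, g) for e, g in zip(estimated, ground_truth))


def sequence_error(
    estimated_frames: Sequence[Frame], ground_truth_frames: Sequence[Frame]
) -> Tuple[float, float]:
    """Mean and (population) standard deviation of the per-frame errors."""
    per_frame = [
        frame_error(e, g) for e, g in zip(estimated_frames, ground_truth_frames)
    ]
    return mean(per_frame), pstdev(per_frame)


# Toy sequence: two frames with two markers each, positions in meters.
estimated = [
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
]
ground_truth = [
    [(0.0, 0.0, 0.1), (1.0, 0.0, 0.1)],
    [(0.0, 0.3, 0.0), (1.0, 0.0, 0.0)],
]

avg, std = sequence_error(estimated, ground_truth)
print(f"{avg:.3f} ± {std:.3f} m")
```

Reporting the result as mean ± standard deviation mirrors the format of the per-sequence numbers in the table.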

We achieve comparable results for basic motions and perform significantly better in the more complex sequences 20 to 27. Only for sequence 24 do we perform worse than [1]. The reason is that this sequence contains a 360° rotation around the vertical axis, which our framework cannot handle. Rotations in the range of ±45°, however, are handled easily. In addition to the graphical representation of the error values, the following table reports the actual numbers.

| Sequence ID | Average pose error in meters |
|---|---|
| 27 | 0.107 ± 0.108 |
| 26 | 0.077 ± 0.043 |
| 25 | 0.071 ± 0.056 |
| 24 | 0.149 ± 0.164 |
| 23 | 0.061 ± 0.045 |
| 22 | 0.066 ± 0.044 |
| 21 | 0.061 ± 0.040 |
| 20 | 0.057 ± 0.036 |
| 19 | 0.072 ± 0.045 |
| 18 | 0.055 ± 0.043 |
| 17 | 0.073 ± 0.047 |
| 16 | 0.056 ± 0.038 |
| 15 | 0.053 ± 0.033 |
| 14 | 0.054 ± 0.030 |
| 13 | 0.051 ± 0.030 |
| 12 | 0.064 ± 0.035 |
| 11 | 0.052 ± 0.029 |
| 10 | 0.054 ± 0.030 |
| 9 | 0.053 ± 0.037 |
| 8 | 0.049 ± 0.031 |
| 7 | 0.050 ± 0.031 |
| 6 | 0.050 ± 0.029 |
| 5 | 0.045 ± 0.026 |
| 4 | 0.049 ± 0.030 |
| 3 | 0.055 ± 0.029 |
| 2 | 0.051 ± 0.023 |
| 1 | 0.050 ± 0.029 |
| 0 | 0.045 ± 0.027 |

[1] Varun Ganapathi, Christian Plagemann, Daphne Koller, and Sebastian Thrun. Real Time Motion Capture Using a Single Time-Of-Flight Camera. CVPR 2010.