GIGA: Generalizable Sparse Image-driven Gaussian Avatars

arXiv 2025

1Max Planck Institute for Informatics, Saarland Informatics Campus

2Saarbrücken Research Center for Visual Computing, Interaction and AI

3Google, Switzerland

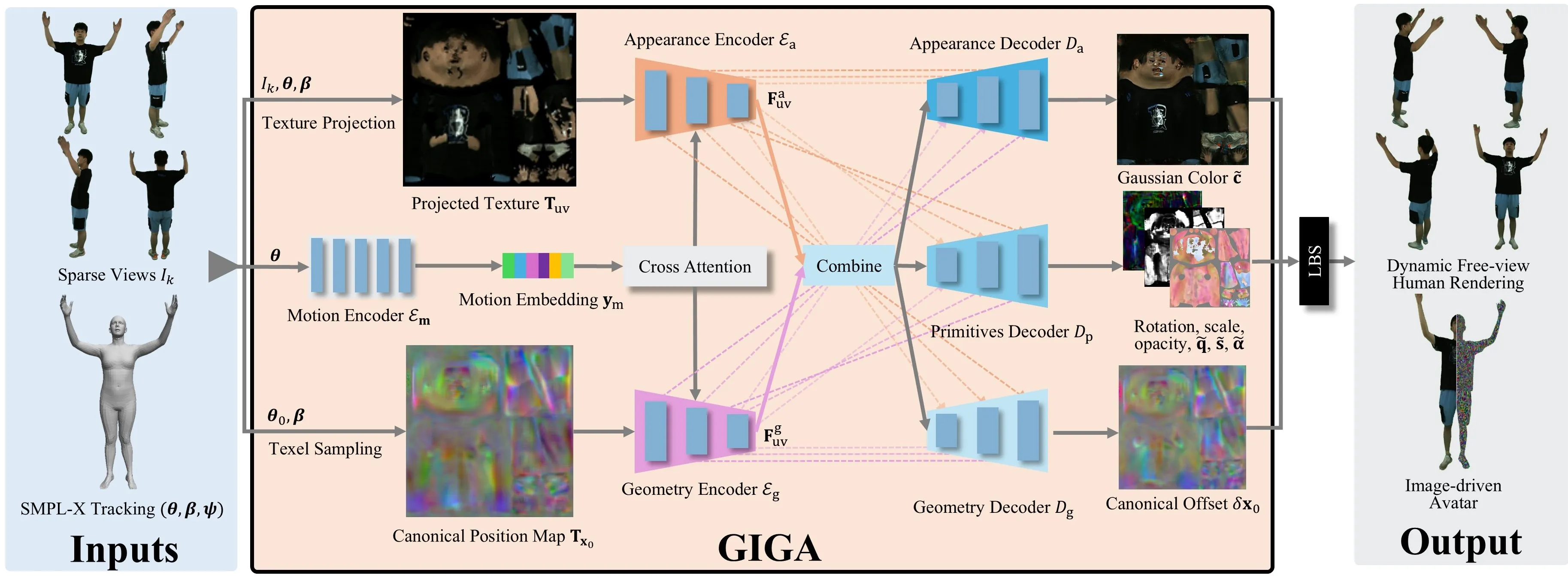

GIGA creates 3D Gaussian avatars from 4 input views and a body template. It computes an RGB texture and canonical position maps as character-specific inputs. Separate appearance and geometry encoders process these inputs. Both encoders use cross-attention for conditioning on the observed character pose embedding, with the motion embedding serving as context and the combined encoder outputs as the query. Multiple decoders generate the final texel-aligned 3D Gaussian avatar, taking into account intermediate feature maps from the encoders, propagated through skip connections (colored dashed lines). The avatar is articulated with linear blend skinning.
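The final articulation step mentioned in the caption, linear blend skinning, can be illustrated with a minimal NumPy sketch. This is the textbook formulation, not code from GIGA: the function name `linear_blend_skinning`, the array layouts, and the two-joint toy example are all assumptions made for illustration, and the handling of Gaussian orientations and scales under skinning is omitted.

```python
import numpy as np


def linear_blend_skinning(points, weights, transforms):
    """Pose canonical points with standard linear blend skinning.

    points:     (N, 3) canonical positions (e.g. Gaussian centers).
    weights:    (N, J) skinning weights, each row summing to 1.
    transforms: (J, 4, 4) per-joint rigid transforms (canonical -> posed).
    Returns the (N, 3) posed positions.
    """
    # Blend the joint transforms per point: (N, 4, 4).
    blended = np.einsum("nj,jab->nab", weights, transforms)
    # Apply each blended transform to its point in homogeneous coordinates.
    ones = np.ones((points.shape[0], 1))
    homogeneous = np.concatenate([points, ones], axis=1)  # (N, 4)
    posed = np.einsum("nab,nb->na", blended, homogeneous)
    return posed[:, :3]


# Toy example (hypothetical data): joint 0 stays fixed,
# joint 1 translates by +2 along the y-axis.
transforms = np.stack([np.eye(4), np.eye(4)])
transforms[1, 1, 3] = 2.0
points = np.array([[1.0, 0.0, 0.0]])
weights = np.array([[0.5, 0.5]])  # point is influenced equally by both joints
posed = linear_blend_skinning(points, weights, transforms)
```

With equal weights, the point moves halfway along the translation of the second joint, which is the defining behavior of the blend.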

Driving a high-quality, photorealistic full-body human avatar from only a few RGB cameras is a challenging problem that has become increasingly relevant with emerging virtual reality technologies. To democratize such technology, a promising solution may be a generalizable method that takes sparse multi-view images of an unseen person and generates photoreal free-view renderings of that identity. However, the current state of the art does not scale to very large datasets and thus lacks diversity and photorealism. To address this problem, we propose a novel, generalizable full-body model for rendering photoreal humans in free viewpoint, driven by sparse multi-view video. For the first time in the literature, our model can scale up training to thousands of subjects while maintaining high photorealism. At the core, we introduce a MultiHeadUNet architecture, which takes sparse multi-view images in texture space as input and predicts Gaussian primitives represented as 2D texels on top of a human body mesh. Importantly, we represent sparse-view image information, body shape, and the Gaussian parameters in 2D so that we can design a deep and scalable architecture based entirely on 2D convolutions and attention mechanisms. At test time, our method synthesizes an articulated 3D Gaussian-based avatar from as few as four input views and a tracked body template for unseen identities. Our method outperforms prior work by a significant margin in both cross-subject generalization and photorealism.
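To make the idea of Gaussian primitives stored as 2D texels more concrete, the sketch below unpacks a decoder output map into per-Gaussian parameters. Everything specific here is an assumption for illustration and is not taken from the paper: the 14-channel layout (3 offset, 4 rotation, 3 log-scale, 1 opacity, 3 color), the activation functions, and the name `unpack_gaussian_texels`. The only ideas borrowed from the text are that each valid texel carries one Gaussian and that a canonical position map anchors it on the body template.

```python
import numpy as np


def unpack_gaussian_texels(texel_map, canonical_positions, valid_mask):
    """Turn a texel-aligned parameter map into a flat set of Gaussians.

    texel_map:           (H, W, 14) raw decoder output (hypothetical layout).
    canonical_positions: (H, W, 3) position map of the body template.
    valid_mask:          (H, W) bool, True where a texel lies on the mesh.
    """
    feats = texel_map[valid_mask]           # (N, 14)
    base = canonical_positions[valid_mask]  # (N, 3)
    # Assumed channel split: offset | quaternion | log-scale | opacity | color.
    offset, quat, log_scale, opacity, color = np.split(
        feats, [3, 7, 10, 11], axis=1
    )
    quat = quat / (np.linalg.norm(quat, axis=1, keepdims=True) + 1e-8)

    def sigmoid(x):
        return 1.0 / (1.0 + np.exp(-x))

    return {
        "position": base + offset,      # offset from the template surface
        "rotation": quat,               # unit quaternion
        "scale": np.exp(log_scale),     # strictly positive
        "opacity": sigmoid(opacity),    # in (0, 1)
        "color": sigmoid(color),        # RGB in (0, 1)
    }


# Toy example (hypothetical data): a 4x4 texture with a 2x2 valid region.
texel_map = np.zeros((4, 4, 14))
texel_map[..., 3] = 1.0  # identity rotation (w = 1) in the assumed layout
canonical_positions = np.random.default_rng(0).normal(size=(4, 4, 3))
valid_mask = np.zeros((4, 4), dtype=bool)
valid_mask[1:3, 1:3] = True
gaussians = unpack_gaussian_texels(texel_map, canonical_positions, valid_mask)
```

Keeping all parameters in a fixed-resolution 2D map is what allows the prediction to be made with ordinary 2D convolutions; the flattening to a point set happens only at the end.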

@article{zubekhin2025giga,
  title={GIGA: Generalizable Sparse Image-driven Gaussian Avatars},
  author={Zubekhin, Anton and Zhu, Heming and Gotardo, Paulo and Beeler, Thabo and Habermann, Marc and Theobalt, Christian},
  year={2025},
  journal={arXiv},
}