Live User-Guided Intrinsic Video For Static Scenes

IEEE Transactions on Visualization and Computer Graphics

(Presented at ISMAR 2017)

Abstract

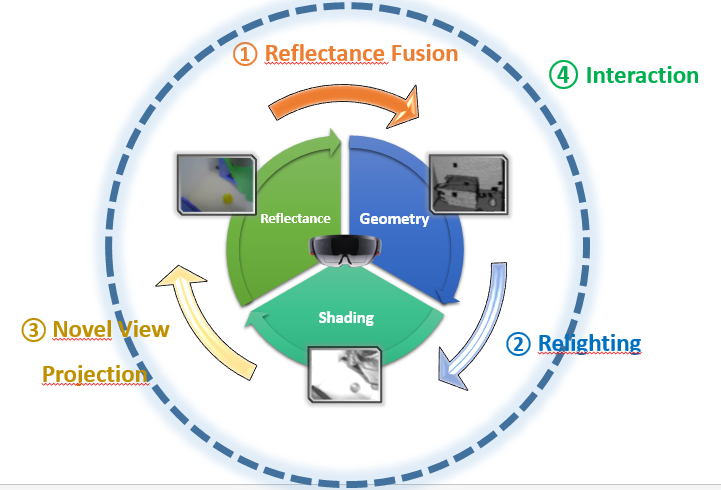

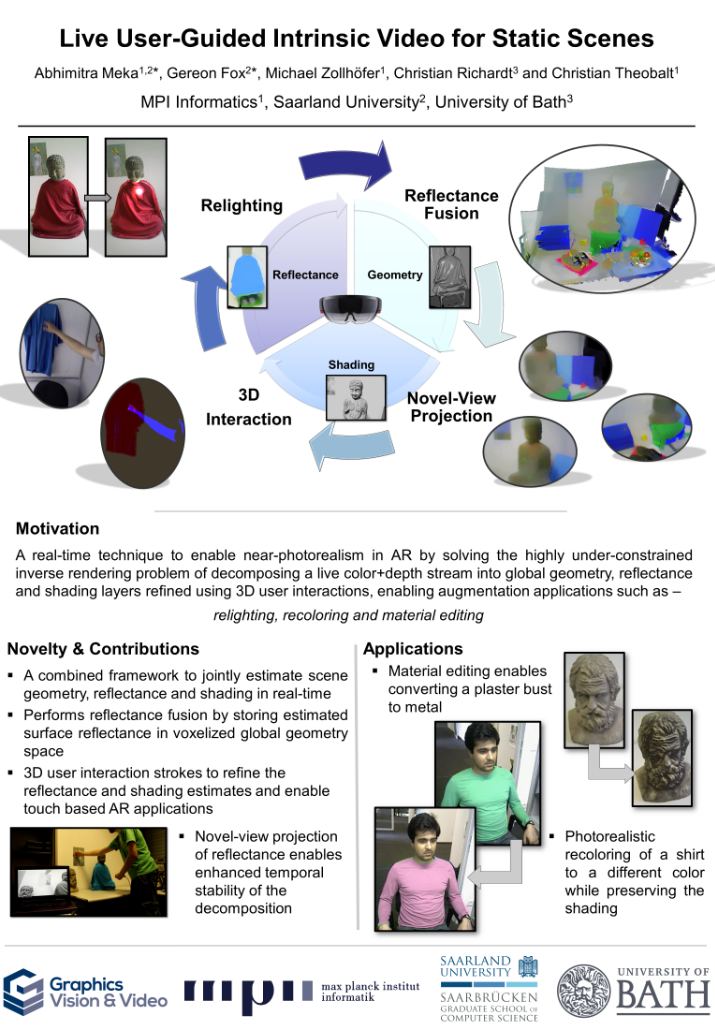

We present a novel real-time approach for user-guided intrinsic decomposition of static scenes captured by an RGB-D sensor. In the first step, we acquire a three-dimensional representation of the scene using a dense volumetric reconstruction framework. The obtained reconstruction serves as a proxy to densely fuse reflectance estimates and to store user-provided constraints in three-dimensional space. User constraints, in the form of constant shading and reflectance strokes, can be placed directly on the real-world geometry using an intuitive touch-based interaction metaphor, or using interactive mouse strokes. Fusing the decomposition results and constraints in three-dimensional space allows for robust propagation of this information to novel views by re-projection. We leverage this information to improve on the decomposition quality of existing intrinsic video decomposition techniques by further constraining the ill-posed decomposition problem. In addition to improved decomposition quality, we show a variety of live augmented reality applications such as recoloring of objects, relighting of scenes and editing of material appearance.
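To illustrate the recoloring application mentioned above, the following is a minimal sketch that assumes the image formation model commonly used in intrinsic decomposition, where each pixel is the product of a reflectance color and a scalar shading value (I = R · S). The abstract does not spell out this model or any API, so the function names `compose` and `recolor`, the toy pixel values, and the mask are all hypothetical and are not the authors' implementation.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def compose(reflectance: List[Pixel], shading: List[float]) -> List[Pixel]:
    """Recombine per-pixel reflectance and scalar shading (assumed model: I = R * S)."""
    return [tuple(c * s for c in r) for r, s in zip(reflectance, shading)]


def recolor(reflectance: List[Pixel], mask: List[bool], new_color: Pixel) -> List[Pixel]:
    """Replace the reflectance of masked pixels; shading is left untouched."""
    return [new_color if m else r for r, m in zip(reflectance, mask)]


# Toy data (hypothetical): two pixels of a red object, one lit and one in
# shadow, followed by a gray background pixel.
reflectance = [(0.8, 0.2, 0.2), (0.8, 0.2, 0.2), (0.5, 0.5, 0.5)]
shading = [1.0, 0.5, 0.75]
mask = [True, True, False]  # pixels belonging to the object to recolor

# Recolor the object to blue, then re-apply the original shading.
edited = compose(recolor(reflectance, mask, (0.2, 0.2, 0.8)), shading)
```

Because only the reflectance layer is edited, the shadowed pixel of the object stays darker than the lit one after recoloring, which is what makes the edit look plausible under the original illumination.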

Downloads

Sequences and Results

[© Copyrights by the Authors, 2017. This is the author's version of the work. It is posted here for your personal use. Not for redistribution. The definitive version will be published in IEEE Transactions on Visualization and Computer Graphics.]

Citation

@article{meka:2017,
  author = {Meka, Abhimitra and Fox, Gereon and Zollh{\"o}fer, Michael and Richardt, Christian and Theobalt, Christian},
  title = {Live User-Guided Intrinsic Video For Static Scenes},
  journal = {IEEE Transactions on Visualization and Computer Graphics},
  url = {http://gvv.mpi-inf.mpg.de/projects/InteractiveIntrinsicAR/},
  year = {2017},
  volume = {23},
  number = {11},
  keywords = {Intrinsic video decomposition;reflectance fusion;user-guided shading refinement},
  doi = {10.1109/TVCG.2017.2734425},
  ISSN = {1077-2626},
  month = {NOVEMBER},
}

Acknowledgments

This research was funded by the ERC Starting Grant CapReal (335545).