VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera

Update: For errata regarding performance on the MPI-INF-3DHP test set, please refer here.

Update: VNect Demo Model and Code Available for Download as a C++ Library!

Abstract

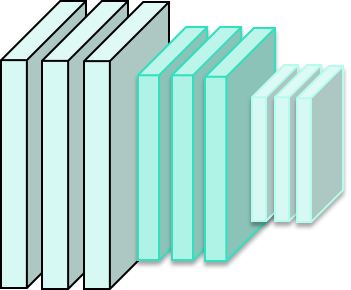

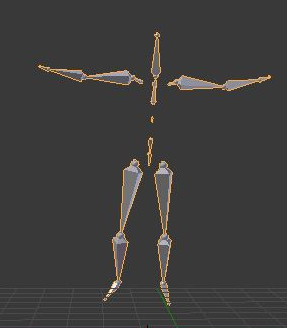

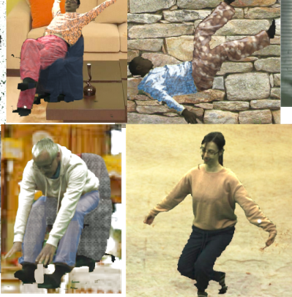

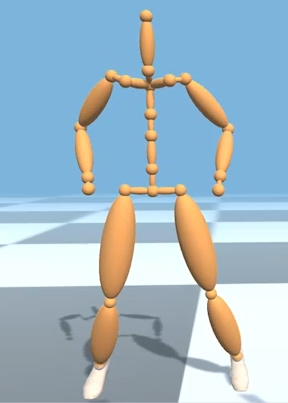

We present the first real-time method to capture the full global 3D skeletal pose of a human in a stable, temporally consistent manner using a single RGB camera. Our method combines a new convolutional neural network (CNN) based pose regressor with kinematic skeleton fitting. Our novel fully-convolutional pose formulation regresses 2D and 3D joint positions jointly in real time and does not require tightly cropped input frames. A real-time kinematic skeleton fitting method uses the CNN output to yield temporally stable 3D global pose reconstructions on the basis of a coherent kinematic skeleton. This makes our approach the first monocular RGB method usable in real-time applications such as 3D character control; until now, the only monocular methods for such applications have employed specialized RGB-D cameras. Our method's accuracy is quantitatively on par with the best offline 3D monocular RGB pose estimation methods. Our results are qualitatively comparable to, and sometimes better than, results from monocular RGB-D approaches such as the Kinect. However, we show that our approach is more broadly applicable than RGB-D solutions, e.g., it works for outdoor scenes, community videos, and low-quality commodity RGB cameras.
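The abstract does not spell out the format of the network's output. As a hedged illustration of how a fully-convolutional network can produce 2D and 3D joint positions together, the sketch below assumes that each joint has one 2D heatmap plus three per-pixel "location maps" holding the joint's x, y and z coordinates (for example relative to a root joint). The 2D position is taken as the heatmap peak, and the 3D position is read from the location maps at that same pixel. The map layout and the function names `argmax_2d` and `read_joint` are hypothetical and are not taken from the VNect code.

```python
from typing import List, Tuple

# Hypothetical layout: a 2D map stored as map[row][col].
Map2D = List[List[float]]


def argmax_2d(heatmap: Map2D) -> Tuple[int, int]:
    """Return (row, col) of the highest-confidence pixel in a heatmap."""
    best = (0, 0)
    best_val = float("-inf")
    for r, row in enumerate(heatmap):
        for c, val in enumerate(row):
            if val > best_val:
                best_val = val
                best = (r, c)
    return best


def read_joint(heatmap: Map2D, x_map: Map2D, y_map: Map2D, z_map: Map2D):
    """Hypothetical readout for one joint.

    The 2D location is the heatmap peak; the 3D location is whatever
    the three location maps store at that same pixel.
    """
    r, c = argmax_2d(heatmap)
    xyz = (x_map[r][c], y_map[r][c], z_map[r][c])
    return (r, c), xyz


if __name__ == "__main__":
    heat = [[0.1, 0.2, 0.0], [0.0, 0.9, 0.3], [0.0, 0.1, 0.0]]
    x = [[0.0, 0.0, 0.0], [0.0, 12.5, 0.0], [0.0, 0.0, 0.0]]
    y = [[0.0, 0.0, 0.0], [0.0, -40.0, 0.0], [0.0, 0.0, 0.0]]
    z = [[0.0, 0.0, 0.0], [0.0, 310.0, 0.0], [0.0, 0.0, 0.0]]
    print(read_joint(heat, x, y, z))
```

Reading the 3D values at the 2D peak ties the two outputs together: the 3D estimate for a joint comes from the image region where the network is most confident that joint appears. A real pipeline would repeat this per joint and then pass the resulting positions to a skeleton-fitting stage.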

VNect Library (New!!)

The C++ library (Windows only) comes bundled with the latest VNect demo network, which is faster and improved over the original, along with the kinematic skeleton fitting stage and configurable filtering for various stages of the pipeline. The hyperparameters of the individual stages are configurable, and the motion tracking results can be exported to BVH. For further details, refer to the documentation supplied with the library. For access, see the GVV Assets Portal.
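The library's filtering is described above only as configurable, so the following is an illustration rather than a description of what the library does. It sketches the 1€ (One Euro) filter of Casiez et al. (2012), a widely used adaptive low-pass filter for noisy real-time tracking signals, applied to a single scalar channel such as one coordinate of one joint. The class names and default parameter values (`min_cutoff`, `beta`, `d_cutoff`) are assumptions chosen for the example.

```python
import math


class LowPass:
    """First-order exponential smoothing with a per-call smoothing factor."""

    def __init__(self) -> None:
        self.prev = None  # last filtered value

    def __call__(self, x: float, alpha: float) -> float:
        if self.prev is None:
            self.prev = x  # first sample passes through unchanged
        else:
            self.prev = alpha * x + (1.0 - alpha) * self.prev
        return self.prev


def smoothing_factor(cutoff: float, dt: float) -> float:
    """Convert a cutoff frequency (Hz) and time step (s) into alpha in (0, 1)."""
    tau = 1.0 / (2.0 * math.pi * cutoff)
    return 1.0 / (1.0 + tau / dt)


class OneEuroFilter:
    """Sketch of a 1-Euro filter for one scalar channel (hypothetical names)."""

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.0,
                 d_cutoff: float = 1.0) -> None:
        self.min_cutoff = min_cutoff
        self.beta = beta
        self.d_cutoff = d_cutoff
        self.x_filt = LowPass()
        self.dx_filt = LowPass()

    def __call__(self, x: float, dt: float) -> float:
        # Estimate speed relative to the previous filtered value.
        prev = self.x_filt.prev
        dx = 0.0 if prev is None else (x - prev) / dt
        edx = self.dx_filt(dx, smoothing_factor(self.d_cutoff, dt))
        # Fast motion raises the cutoff (less lag); slow motion lowers it
        # (more smoothing, less jitter).
        cutoff = self.min_cutoff + self.beta * abs(edx)
        return self.x_filt(x, smoothing_factor(cutoff, dt))


if __name__ == "__main__":
    dt = 1.0 / 30.0  # assumed 30 fps input
    slow = OneEuroFilter(beta=0.0)
    fast = OneEuroFilter(beta=10.0)
    for f in (slow, fast):
        f(0.0, dt)
    print(slow(1.0, dt), fast(1.0, dt))
```

With `beta = 0` the filter behaves like a fixed exponential smoother and lags behind a sudden step; a larger `beta` lets it follow fast motion more closely while still damping jitter when the joint is nearly still. This speed-dependent trade-off is why adaptive filters of this kind are popular for real-time pose streams.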

Downloads

Citation

@article{VNect_SIGGRAPH2017,
  author = {Mehta, Dushyant and Sridhar, Srinath and Sotnychenko, Oleksandr and Rhodin, Helge and Shafiei, Mohammad and Seidel, Hans-Peter and Xu, Weipeng and Casas, Dan and Theobalt, Christian},
  title = {VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera},
  journal = {ACM Transactions on Graphics},
  url = {http://gvv.mpi-inf.mpg.de/projects/VNect/},
  numpages = {14},
  volume = {36},
  number = {4},
  month = jul,
  year = {2017},
  doi = {10.1145/3072959.3073596}
}

Contact

For questions and clarifications regarding access and details of the models/API, please get in touch with:

Dushyant Mehta dmehta@mpi-inf.mpg.de

Oleksandr Sotnychenko osotnych@mpi-inf.mpg.de

To get access to the project assets, you need to register with your institutional email address and provide your affiliation details and a description of what you would like to use the trained model for. We make the code, model, and data available only for non-commercial research use.