SceNeRFlow: Time-Consistent Reconstruction of General Dynamic Scenes

Abstract

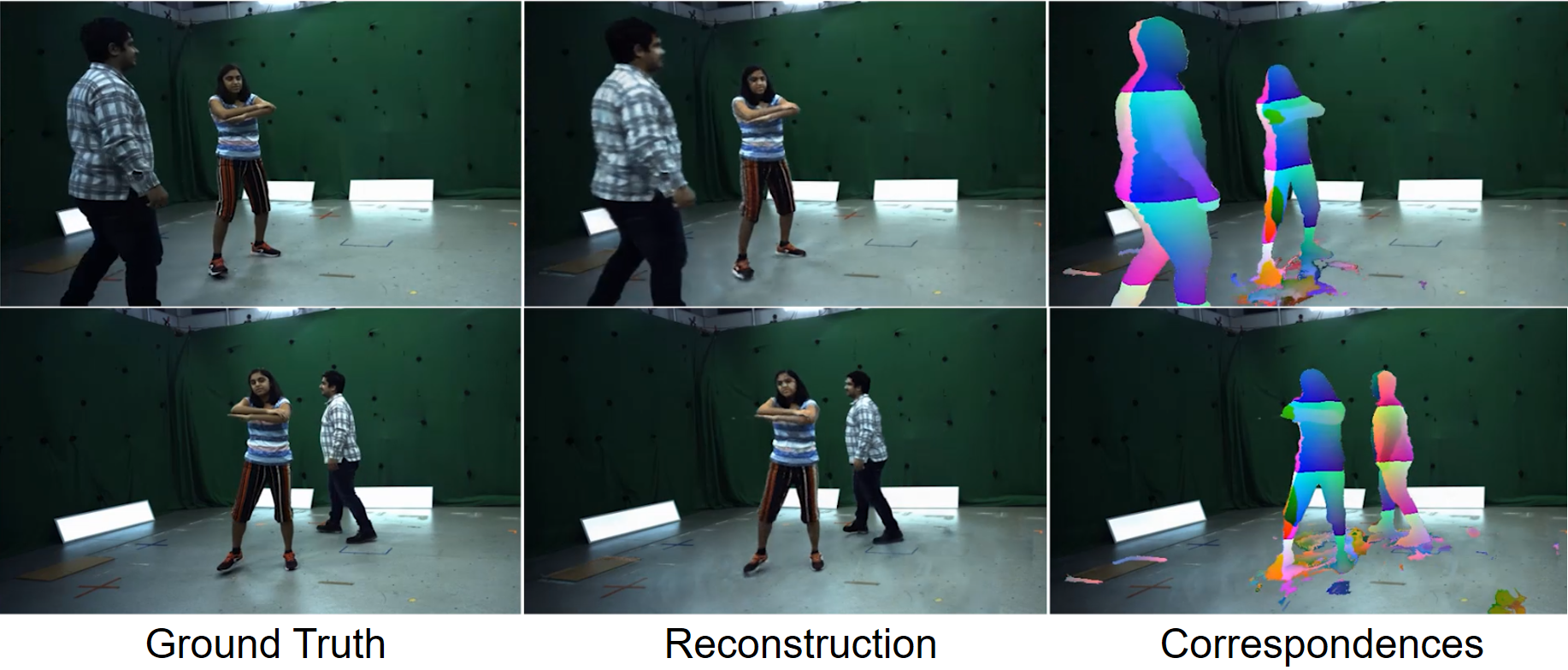

Existing methods for the 4D reconstruction of general, non-rigidly deforming objects focus on novel-view synthesis and neglect correspondences. However, time consistency enables advanced downstream tasks like 3D editing, motion analysis, or virtual-asset creation. We propose SceNeRFlow to reconstruct a general, non-rigid scene in a time-consistent manner. Our dynamic-NeRF method takes multi-view RGB videos and background images from static cameras with known camera parameters as input. It then reconstructs the deformations of an estimated canonical model of the geometry and appearance in an online fashion. Since this canonical model is time-invariant, we obtain correspondences even for long-term, long-range motions. We employ neural scene representations to parametrize the components of our method. Like prior dynamic-NeRF methods, we use a backwards deformation model. We find non-trivial adaptations of this model necessary to handle larger motions: We decompose the deformations into a strongly regularized coarse component and a weakly regularized fine component, where the coarse component also extends the deformation field into the space surrounding the object, which enables tracking over time. We show experimentally that, unlike prior work that only handles small motion, our method enables the reconstruction of studio-scale motions.
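The coarse/fine decomposition of the backward deformation model can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names (`coarse_offset`, `fine_offset`, `to_canonical`), the analytic offsets standing in for learned neural fields, and the choice to apply the fine component on top of the coarsely deformed point are all assumptions made for illustration.

```python
import numpy as np

def coarse_offset(x, t):
    """Hypothetical stand-in for the strongly regularized coarse field.

    It is smooth and defined everywhere in space (here: a translation that
    grows linearly with time), mimicking a field that also covers the
    space surrounding the object.
    """
    return np.broadcast_to(np.array([0.5 * t, 0.0, 0.0]), x.shape)

def fine_offset(x, t):
    """Hypothetical stand-in for the weakly regularized fine field.

    A small, high-frequency residual bounded by 0.01 * t per coordinate.
    """
    return 0.01 * t * np.sin(10.0 * x)

def to_canonical(x, t):
    """Backward deformation: map points x of shape (N, 3), observed at
    time t, into the time-invariant canonical space."""
    x_coarse = x + coarse_offset(x, t)          # large, smooth motion first
    return x_coarse + fine_offset(x_coarse, t)  # then a small residual

# Points observed at different times correspond if they map to the same
# canonical location, since the canonical model does not change over time.
pts = np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 3.0]])
canonical_pts = to_canonical(pts, t=1.0)
```

In this sketch the coarse term carries most of the displacement and the fine term only adds bounded detail, which mirrors the division of labor described in the abstract.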

Teaser (8 MB)

Supplemental Video (86 MB)

Citation

@inproceedings{tretschk2024scenerflow,
  title = {SceNeRFlow: Time-Consistent Reconstruction of General Dynamic Scenes},
  author = {Tretschk, Edith and Golyanik, Vladislav and Zollh\"{o}fer, Michael and Bozic, Aljaz and Lassner, Christoph and Theobalt, Christian},
  year = {2024},
  booktitle = {International Conference on 3D Vision (3DV)},
}

Acknowledgments

All data capture and evaluation were done at MPII. Research conducted by Vladislav Golyanik and Christian Theobalt at MPII was supported in part by the ERC Consolidator Grant 4DReply (770784). This work was also supported by a Meta Reality Labs research grant.

Contact

For questions or clarifications, please get in touch with Edith Tretschk (tretschk@mpi-inf.mpg.de).