Graphics, Vision & Video

Lightweight Binocular Facial Performance Capture under Uncontrolled Lighting

| Levi Valgaerts 1 | Chenglei Wu 1,2 | Andrés Bruhn 3 | Hans-Peter Seidel 1 | Christian Theobalt 1 |

| 1 MPI for Informatics | 2 Intel Visual Computing Institute | 3 University of Stuttgart |

| Abstract | Videos | Bibtex | Data Sets |

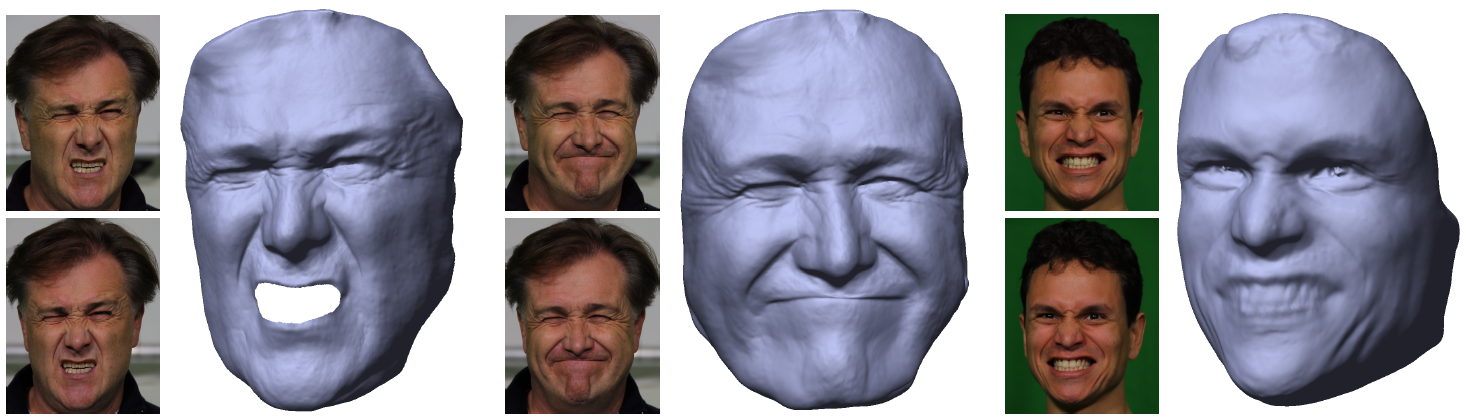

Recent progress in passive facial performance capture has shown impressively detailed results on highly articulated motion. However, most methods rely on complex multi-camera set-ups, controlled lighting or fiducial markers. This prevents them from being used in general environments, outdoor scenes, during live action on a film set, or by freelance animators and everyday users who want to capture their digital selves. In this paper, we therefore propose a lightweight passive facial performance capture approach that is able to reconstruct high-quality dynamic facial geometry from only a single pair of stereo cameras. Our method succeeds under uncontrolled and time-varying lighting, and also in outdoor scenes. Our approach builds upon and extends recent image-based scene flow computation, lighting estimation and shading-based refinement algorithms. It integrates them into a pipeline that is specifically tailored towards facial performance reconstruction from challenging binocular footage under uncontrolled lighting. In an experimental evaluation, the strong capabilities of our method become explicit: We achieve detailed and spatio-temporally coherent results for expressive facial motion in both indoor and outdoor scenes -- even from low quality input images recorded with a hand-held consumer stereo camera. We believe that our approach is the first to capture facial performances of such high quality from a single stereo rig and we demonstrate that it brings facial performance capture out of the studio, into the wild, and within the reach of everybody.


| Paper pdf (4.7M) / (65.2M) | Supplementary Material pdf (38.3M) | Video avi (126.9M) | Presentation pptx (123.8M) |

| Supplementary video to the paper |

| Additional video showing a result for 560 frames (around 22 seconds) |

@inproceedings{VWBS12,


We make the data shown in the following video available on request.

It consists of 200 frames of a stereo sequence captured at 25 fps (around 8 seconds) and the corresponding textured, spatio-temporally coherent 3D reconstructions. As mentioned in the paper, the left and right video sequences are synchronised by event, which makes the synchronisation sub-frame accurate at best. The face meshes have a resolution of 500K vertices, which is 5 times higher than in the results shown in the paper and the supplementary video. The algorithm used to obtain these results is the one described in the paper; the higher resolution only improves the captured detail.
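The figures quoted on this page can be cross-checked with a few lines of arithmetic. The sketch below is only illustrative: the function names are hypothetical, the 25 fps rate for the 560-frame video is an assumption (the page states 25 fps only for the data set), and the 100K figure for the paper's meshes is merely implied by the "5 times higher" statement.

```python
def clip_duration_seconds(num_frames: int, fps: float) -> float:
    """Duration of a clip with `num_frames` frames played at `fps` frames per second."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


def implied_base_resolution(high_res_vertices: int, factor: int) -> int:
    """Vertex count implied by a mesh that is `factor` times denser than the baseline."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return high_res_vertices // factor


FPS = 25.0  # stated for the data set; assumed here for the additional video as well

data_set_seconds = clip_duration_seconds(200, FPS)         # 200 frames -> 8.0 s
extra_video_seconds = clip_duration_seconds(560, FPS)      # 560 frames -> 22.4 s
paper_vertices = implied_base_resolution(500_000, 5)       # implied, not stated: 100000

print(data_set_seconds, extra_video_seconds, paper_vertices)
```

Both durations agree with the "around 8 seconds" and "around 22 seconds" given on this page.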

Terms of use: The provided data is intended for research purposes only; any use for non-scientific purposes is not allowed. This includes publishing any scientific results obtained with our data in non-scientific literature, such as the tabloid press. We ask researchers to respect our actors and not to use the data for any distasteful manipulations (such as hideous deformations, exploding heads, or manipulations that might be culturally sensitive). We also ask researchers not to disseminate this data outside their institute; distribution within the affiliated institution is allowed.

Requesting the data: Please understand that we can only make the data available to senior project managers or senior researchers. To keep track of the researchers and institutions requesting the data and to ascertain that you abide by the above terms of use, we make the data available once you have sent an email to facecap-at-mpi-inf.mpg.de stating the following:
